Model-based testing of state machines

This article explains how sinelaboreRT helps you test your state-based software.

In 2000 Martin Gomez wrote on embedded.com: “The beauty of coding even simple algorithms as state machines is that the test plan almost writes itself. All you have to do is to go through every state transition. I usually do it with a highlighter in hand, crossing off the arrows on the state transition diagram as they successfully pass their tests. This is a good reason to avoid ‘hidden states’-they're more likely to escape testing than explicit states. Until you can use the ‘real’ hardware to induce state changes, either do it with a source-level debugger, or build an ‘input poker’ utility that lets you write the values of the inputs into your application.” In many teams this is still common practice.

More generally, state machine testing covers the following steps: (1) building a (test) model, (2) generating test cases, (3) generating expected outputs, (4) running the tests, (5) comparing actual outputs with expected outputs, and (6) deciding on further actions (e.g. whether to modify the model, generate more tests, or stop testing).

Model-based testing usually means that the test cases and other required data (e.g. test stimuli or expected test results) are derived automatically from the state machine model. Automated test execution and validation are often associated with model-based testing as well. Probably only a few organizations have implemented a fully automated test system covering all these steps; especially for smaller teams, the required investment in tools is often beyond budget.

This article explains the features of sinelaboreRT that are relevant for (model-based) testing. Throughout the article we use two examples: (I) the PLCopen Equivalent function block and (II) the microwave oven from the manual. Both examples are available for download, so you can follow all the steps yourself. Because we use the same model to generate both the code and the test cases, step one (building the model) is already done.

Generating test cases

Defining test cases is the second step, and it consumes a lot of time if done manually. The code generator can save much of this effort by automatically suggesting test routes through a given state machine.

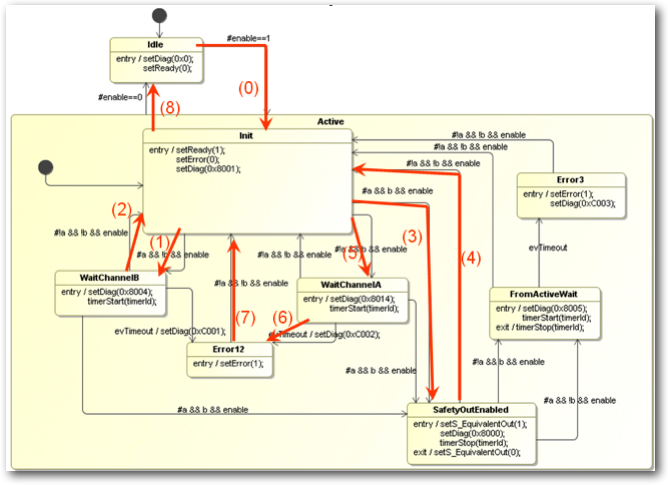

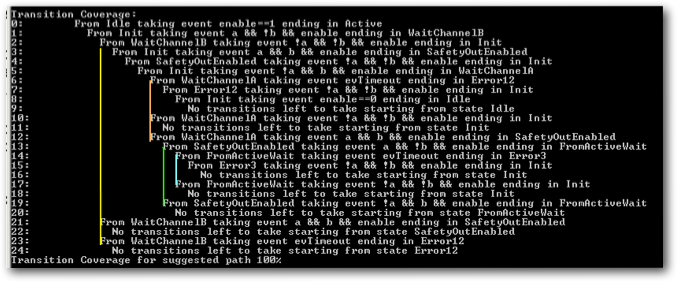

The algorithm used ensures that every transition is taken at least once (100% transition coverage, which also implies 100% state coverage). It is therefore no longer necessary to go through the diagram with a highlighter in hand. Figure 1 shows the coverage information for the Equivalent state machine. The command-line option '-c' switches on the generation of coverage data. The following command generates code and test routes from a MagicDraw model:

java -jar codegen.jar -v -c -p md -o equivalent -t "Model:Equivalent" equivalent.xml

The output looks as follows:

Figure 1: Coverage output generated by the code generator for the Safety Function Block state machine. The colored lines were added to better indicate the different routes.

You can see that the first test route starts from the initial state in line 0 and ends at line 9, where no untaken transitions are left (look at the state diagram below and follow the red arrows). The output is indented one step further to the right with every new transition on the route. Sometimes not all outgoing transitions of a state can be covered within a single route; in this case the test route must branch. Take «WaitChannelB» as an example: not all transitions leaving WaitChannelB could be tested in one route, so two further branches are necessary. They are shown in lines 21 and 23 of figure 1 (follow the yellow line). Branches are indicated by the same indentation level. The other branches in our example are marked with the red, green and blue lines.
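
The article does not describe the route-finding algorithm used by sinelaboreRT. As a rough illustration of the idea only, the following C sketch performs a depth-first walk that takes every transition exactly once, starting from the initial state. It prints one line per transition and indents each new step of a route one level further. A branch is printed at the same indentation level as the sibling transition it splits from, similar to the console output in figure 1. The toy machine (states Idle, Running, Error and its events) is hypothetical and is not the Equivalent block. The functions generate_routes() and all_transitions_covered() are also names invented for this sketch.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical toy machine (not the Equivalent block): 3 states, 5 transitions. */
enum { IDLE, RUNNING, ERROR_STATE, NUM_STATES };
static const char *const state_names[NUM_STATES] = { "Idle", "Running", "Error" };

typedef struct {
    int src;             /* source state */
    const char *trigger; /* event that fires the transition */
    int dst;             /* target state */
} Transition;

static const Transition transitions[] = {
    { IDLE,        "evStart", RUNNING     },
    { RUNNING,     "evStop",  IDLE        },
    { RUNNING,     "evFault", ERROR_STATE },
    { ERROR_STATE, "evReset", IDLE        },
    { IDLE,        "evFault", ERROR_STATE },
};
#define NUM_TRANSITIONS ((int)(sizeof transitions / sizeof transitions[0]))

static bool taken[NUM_TRANSITIONS]; /* coverage marks, one per transition */
static int steps;                   /* number of test steps printed */

/* Take every untaken transition leaving 'state'. The first one extends the
   current route one level deeper; later ones are branches and are printed at
   the same indentation level as the first. */
static void walk(int state, int depth)
{
    assert(state >= 0 && state < NUM_STATES);
    for (int i = 0; i < NUM_TRANSITIONS; ++i) {
        if (transitions[i].src != state || taken[i])
            continue;
        taken[i] = true;
        printf("%*s%s --%s--> %s\n", depth * 2, "", state_names[state],
               transitions[i].trigger, state_names[transitions[i].dst]);
        ++steps;
        walk(transitions[i].dst, depth + 1);
    }
}

/* Prints the test routes starting at 'initial' and returns the number of steps. */
int generate_routes(int initial)
{
    for (int i = 0; i < NUM_TRANSITIONS; ++i)
        taken[i] = false;
    steps = 0;
    walk(initial, 0);
    return steps;
}

/* True if every transition was taken at least once (100% transition coverage). */
bool all_transitions_covered(void)
{
    for (int i = 0; i < NUM_TRANSITIONS; ++i)
        if (!taken[i])
            return false;
    return true;
}
```

For this toy machine, generate_routes(IDLE) returns 5. The first four printed lines form one route (Idle → Running → Idle → Error → Idle). The fifth line, Running --evFault--> Error, is printed at the same indentation as Running --evStop--> Idle. It marks a branch that a tester reaches by replaying Idle --evStart--> Running first. Transitions that cannot be reached from the initial state stay unmarked, and all_transitions_covered() reports this.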

SinelaboreRT can also generate an Excel sheet with all the test routes. More about this feature follows below.

Figure 2: State machine with the test path from step 0 to 8 as suggested by the coverage algorithm (see figure 1).

Expected outputs

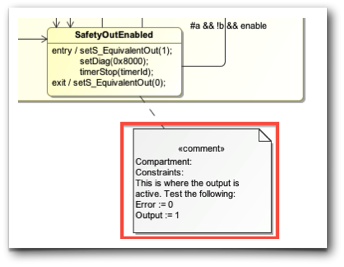

To assess the test results, the expected behavior must be defined. SinelaboreRT cannot determine output values from the transitions or guards; it is your responsibility to define the output variables of the state machine (hardware or software). However, if you embed the expected output values in the state machine model, the code generator can extract them for you automatically.

To allow the outputs to be extracted, define them as so-called state constraints. UML does not support this directly, so you attach a comment to the state. In the comment, start the constraints section with the keyword ‘Constraints:’. The format of the specification that follows is up to you. Figure 3 shows an example for ‘SafetyOutputState’: it states that the error output must be zero, whereas the normal output must be one.

Figure 3: Use a comment to specify a constraint per state.
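
The text after ‘Constraints:’ is free-form, so how a test harness uses it is up to you. One possible approach is sketched below in C. It assumes the constraints are simple output = value pairs transcribed by hand into a per-state table of expected outputs, which then acts as the test oracle. Only the SafetyOutputState values (error output 0, normal output 1) come from the article. The output names, the DONT_CARE marker, the WaitChannelB row and outputs_ok() are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Output variables of the machine under test (hypothetical names). */
typedef struct {
    int error_out;
    int normal_out;
} Outputs;

#define DONT_CARE (-1) /* no constraint specified for this output */

typedef enum { ST_WAIT_CHANNEL_B, ST_SAFETY_OUTPUT, ST_COUNT } StateId;

/* Expected outputs per state, transcribed from the 'Constraints:' comments.
   Only the SafetyOutputState row reflects figure 3; the other is a placeholder. */
static const Outputs expected[ST_COUNT] = {
    [ST_WAIT_CHANNEL_B] = { DONT_CARE, DONT_CARE },
    [ST_SAFETY_OUTPUT]  = { 0, 1 }, /* error output 0, normal output 1 */
};

static bool matches(int want, int actual)
{
    return want == DONT_CARE || want == actual;
}

/* Test oracle: true if 'actual' satisfies every constraint of 'state'. */
bool outputs_ok(StateId state, Outputs actual)
{
    assert((int)state >= 0 && (int)state < ST_COUNT);
    const Outputs *want = &expected[state];
    return matches(want->error_out, actual.error_out) &&
           matches(want->normal_out, actual.normal_out);
}
```

A harness would call outputs_ok() after each test step with the current state and the measured outputs. Keeping the constraints as data rather than as code makes it easy to update the table when the diagram changes.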

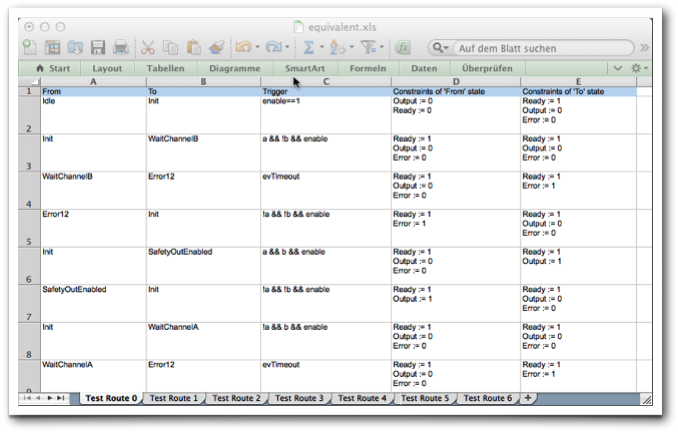

Besides the console output shown above, which gives a quick overview of the test effort, an Excel file can be created. It contains one route per sheet, so there are as many sheets as routes needed to achieve 100% transition coverage. Each line in a sheet is a single test step on the route, starting from the initial state, and lists the present state and the trigger that leads to the next state. In addition, the constraints of the source and target states are listed; this information is taken from the state diagram where it has been specified (see figure 3 above). Figure 4 shows the sheet for the state diagram in figure 2.

Figure 4: Excel sheets with the test routes for the Equivalent state machine.

Each line contains a test step. If constraint data (the test oracle) is provided in the state diagram, it is added too. A tester can use this data as input for the test plan.
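
A tester can turn one sheet into a data-driven test. The C sketch below assumes each row is reduced to present state, trigger and expected next state. It replays a route from the initial state and reports the first step that fails. The toy machine and its dispatch function stand in for the generated code, whose interface is not shown in this article. They are hypothetical, as are TestStep and run_route().

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical toy machine standing in for the generated state machine code. */
typedef enum { IDLE, RUNNING, ERROR_STATE } State;
typedef enum { EV_START, EV_STOP, EV_FAULT, EV_RESET } Event;

static State current = IDLE;

static void toy_dispatch(Event ev)
{
    switch (current) {
    case IDLE:
        if (ev == EV_START)      current = RUNNING;
        else if (ev == EV_FAULT) current = ERROR_STATE;
        break;
    case RUNNING:
        if (ev == EV_STOP)       current = IDLE;
        else if (ev == EV_FAULT) current = ERROR_STATE;
        break;
    case ERROR_STATE:
        if (ev == EV_RESET)      current = IDLE;
        break;
    }
}

/* One row of a route sheet: present state, trigger, expected next state. */
typedef struct {
    State present;
    Event trigger;
    State next;
} TestStep;

/* Replays a route from the initial state. Returns the index of the first
   failing step, or -1 if every step ends in the expected state. */
int run_route(const TestStep *steps, size_t n)
{
    assert(steps != NULL || n == 0);
    current = IDLE; /* every route starts from the initial state */
    for (size_t i = 0; i < n; ++i) {
        if (current != steps[i].present)
            return (int)i;
        toy_dispatch(steps[i].trigger);
        if (current != steps[i].next)
            return (int)i;
    }
    return -1;
}
```

A returned index points the tester to the row of the sheet to investigate. Checking the constraint columns of each row would be an additional step on top of this state check.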

Running tests

In the embedded world, test beds are usually highly hardware-dependent. Therefore sinelaboreRT cannot generate a test bed or the test case code for you. A unit test framework as described in [2] can be of great help here. To check that the machine is in the correct state, you can instruct sinelaboreRT to generate trace code. Within the trace function you can send the trace data to a monitoring PC or store it, e.g. for later analysis. This feature is discussed in more detail in the second part of this article.
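
The article does not show the signature of the generated trace function. Assuming it can be made to call a hook with one numeric ID per traced transition, a small ring buffer like the one below could hold the trace on the target. It could then be analyzed later or uploaded to a monitoring PC. trace_hook(), trace_reset(), trace_snapshot() and TRACE_CAPACITY are hypothetical names chosen for this sketch.

```c
#include <assert.h>
#include <stddef.h>

#define TRACE_CAPACITY 16u /* small, fixed-size buffer for a microcontroller */

static unsigned short trace_buf[TRACE_CAPACITY];
static size_t trace_head;  /* index of the next slot to write */
static size_t trace_count; /* number of valid records, <= TRACE_CAPACITY */

/* Hypothetical hook, assumed to be called from the generated trace code
   with one numeric ID per traced transition. */
void trace_hook(unsigned short id)
{
    trace_buf[trace_head] = id;
    trace_head = (trace_head + 1u) % TRACE_CAPACITY;
    if (trace_count < TRACE_CAPACITY)
        ++trace_count; /* once full, the oldest record is overwritten */
}

/* Discards all stored records, e.g. before running the next test route. */
void trace_reset(void)
{
    trace_head = 0;
    trace_count = 0;
}

/* Copies up to 'max' of the most recent records into 'out', oldest first,
   and returns how many were copied. */
size_t trace_snapshot(unsigned short *out, size_t max)
{
    assert(out != NULL || max == 0);
    size_t n = trace_count < max ? trace_count : max;
    size_t start = (trace_head + TRACE_CAPACITY - n) % TRACE_CAPACITY;
    for (size_t i = 0; i < n; ++i)
        out[i] = trace_buf[(start + i) % TRACE_CAPACITY];
    return n;
}
```

The buffer keeps only the most recent TRACE_CAPACITY records, a common trade-off on targets with little RAM. A larger buffer or streaming to the monitoring PC avoids losing the start of long routes.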

Comparing actual outputs and deciding on further actions

Comparing the actual outputs with the expected outputs and deciding on further actions (e.g. whether to modify the model, generate more tests, or stop testing) are manual steps; sinelaboreRT does not directly support them.

Summary

This first part of a two-part article has discussed how to test your state machine model and how the sinelaboreRT code generator supports you in creating test routes that reach 100% transition coverage. Part II shows how sinelaboreRT helps you trace the test execution and visualize the current status of your state machine and the coverage actually reached.

Your feedback is very welcome!

Further readings

[1] Mark Utting and Bruno Legeard: “Practical Model-Based Testing: A Tools Approach”. Chapter 5 discusses “Testing from finite state machines”.

[2] James W. Grenning: “Test-Driven Development for Embedded C”. It teaches you how to use the unit testing frameworks Unity and CppUTest to test embedded software. The book does not explicitly discuss state machine testing.